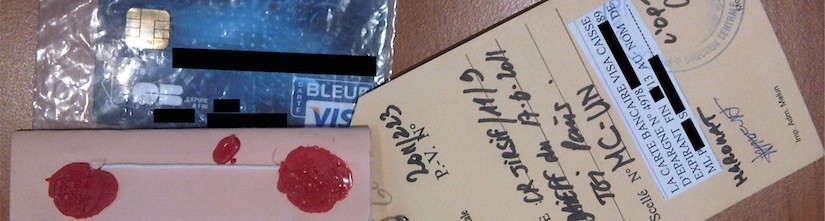

Chip and PIN was designed to prevent fraud, but it also created a new opportunity for criminals that is taking retailers by surprise. Known as “forced authorisation”, the fraud requires no special equipment, and when it works, it works big: in one transaction a jeweller’s store lost £20,500. This type of fraud is already a problem in the UK, and now that US retailers have made it through the first Black Friday since the Chip and PIN deadline, criminals there will be looking into what new fraud techniques are available.

The fraud works when the retailer has a one-piece Chip and PIN terminal that’s passed between the customer and retailer during the course of the transaction. This type of terminal is common, particularly in smaller shops and restaurants, because it’s cheaper than a terminal with a separate PIN pad (at least until a fraud happens).

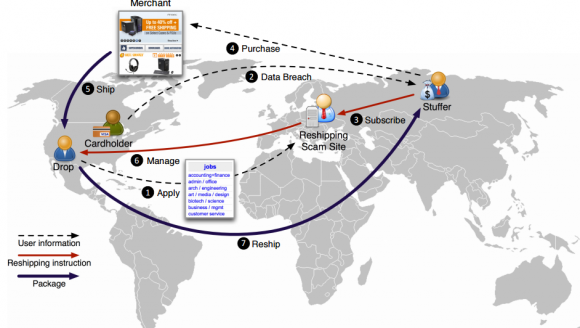

Forced authorisation fraud starts like a normal sale: the retailer sets up the terminal for a transaction by inserting the customer’s card and entering the amount, then hands the terminal over to the customer so they can type in the PIN. But the criminal has used a stolen or counterfeit card, and due to the high value of the transaction the terminal performs a “referral”: it asks the retailer to call the bank to perform additional checks, such as having the customer answer a security question. If the security checks pass, the bank will give the retailer an authorisation code to enter into the terminal.

The problem is that when the terminal asks for these security checks, it’s still in the hands of the criminal, and it’s the criminal who follows the steps that the retailer should have followed. Since there’s no phone conversation with the bank, the criminal doesn’t know the correct authorisation code. But what surprises retailers is that the criminal can type in anything at this stage and the transaction will go through. The criminal might also be able to bypass other security features. For example, they could override the checking of the PIN by following the steps the retailer would take if the customer had forgotten the PIN.

By the time the terminal is passed back to the retailer, it looks like the transaction was completed successfully. The receipt will differ only very subtly from that of a normal transaction, if at all. The criminal walks off with the goods and it’s only at the end of the day that the authorisation code is checked by the bank. By that time, the criminal is long gone. Because some of the security checks the bank asked for weren’t completed, the retailer doesn’t get the money.
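
The sequence described above can be sketched as a toy model. This is only an illustration of the logic, not real terminal firmware or any bank’s settlement process: the class names (`ToyTerminal`, `ToyBank`), the status strings, and the referral threshold are all hypothetical. The point it tries to capture is that the terminal has no way to check the authorisation code when it is typed in, so any code makes the sale look complete, and the mismatch only surfaces when the bank checks the code later.

```python
from dataclasses import dataclass
from typing import Optional

# Hypothetical threshold: the real trigger for a referral is not given here.
REFERRAL_THRESHOLD_PENCE = 100_000


@dataclass
class Transaction:
    amount_pence: int
    status: str = "pending"
    entered_auth_code: Optional[str] = None


class ToyTerminal:
    """Toy one-piece terminal (an assumption-laden sketch, not a real device)."""

    def start(self, amount_pence: int) -> Transaction:
        txn = Transaction(amount_pence)
        # High-value transactions ask for a call to the bank.
        if amount_pence >= REFERRAL_THRESHOLD_PENCE:
            txn.status = "referral"
        else:
            txn.status = "approved"
        return txn

    def enter_auth_code(self, txn: Transaction, code: str) -> Transaction:
        # The terminal cannot verify the code at this point, so it
        # records whatever was typed and reports success.
        txn.entered_auth_code = code
        txn.status = "approved"
        return txn


class ToyBank:
    """Toy bank that only checks authorisation codes at settlement."""

    def __init__(self) -> None:
        self.issued_codes = set()

    def issue_code(self, code: str) -> None:
        # Would happen during the phone call the criminal skips.
        self.issued_codes.add(code)

    def settle(self, txn: Transaction) -> bool:
        # Transactions that never went to referral settle normally.
        if txn.entered_auth_code is None:
            return txn.status == "approved"
        # Referred transactions are paid only if the bank issued the code.
        return txn.entered_auth_code in self.issued_codes


if __name__ == "__main__":
    terminal, bank = ToyTerminal(), ToyBank()
    txn = terminal.start(2_050_000)         # the £20,500 sale
    terminal.enter_auth_code(txn, "12345")  # criminal types anything
    print("Terminal shows:", txn.status)
    print("Retailer paid at settlement:", bank.settle(txn))
```

In this model the fix is procedural rather than technical: if the retailer keeps hold of the terminal during the referral and enters a code the bank actually issued, `settle` succeeds.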


As a child,
