For years, the world of cryptocurrency has been synonymous with cutting-edge digital security and the constant threat of sophisticated cyberattacks. The community has honed its skills in protecting its assets from malware, fraudsters, and cybercriminals. But what if the biggest threat to your cryptocurrency is not lurking in the digital shadows, but right outside your door, wielding something as primitive as a “wrench”?

Our paper, “Investigating Wrench Attacks: Physical Attacks Targeting Cryptocurrency Users,” published at the Advances in Financial Technologies conference (AFT 2024), shatters the illusion that cryptocurrency crime is purely an online phenomenon. It exposes a deeply unsettling reality: physical “wrench attacks,” crimes in which perpetrators use force or the threat of force to steal cryptocurrency. These attacks are violent, underreported, and alarmingly effective. In recent months, the cryptocurrency ecosystem has been shaken by a surge of serious and violent wrench attacks, including kidnappings and murders. One example is the recent kidnapping of David Balland, co-founder of Ledger, the well-known cryptocurrency hardware wallet company. Yet wrench attacks are not new: they have existed since Bitcoin’s early days, affecting even prominent figures in the space such as Hal Finney.

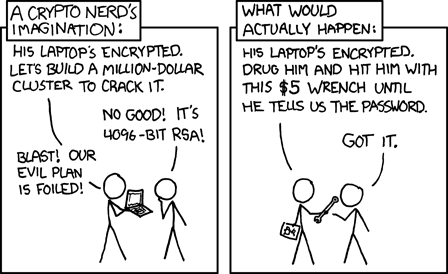

Beyond the Keyboard: What is a $5 Wrench Attack?

The term “wrench attack” comes from a popular xkcd webcomic depicting a scenario in which a physical threat (a $5 wrench) is used to extract a password from a victim, bypassing sophisticated digital security without any technical effort. In the context of cryptocurrency, these attacks are exactly that: old-school physical assaults or threats targeting cryptocurrency owners to illegally seize their assets or the means to access them. In our paper, we propose the first formal legal definition of the attack, together with its constituent elements as a crime under criminal law norms, to help pin down the attack’s precise scope and make its occurrence measurable.

What makes these attacks so distinct and perilous compared to any other cryptocurrency crime?
