Online accounts enable us to store and access documents, make purchases, and connect with new friends, among many other capabilities. Even though online accounts are convenient, they also expose users to risks such as inadvertent disclosure of private information and fraud. In recent times, data breaches and the attacks that follow them have become commonplace. For instance, over the last four years, cybercriminals have stolen the account credentials of millions of Dropbox, Yahoo, and LinkedIn users in massive attacks.

After online accounts are compromised by cybercriminals, what happens to them? In our paper, presented today at the 2016 ACM Internet Measurement Conference, we answer this question. Answering it requires monitoring compromised accounts, which is hard: only large online service providers, such as Google or Yahoo, have access to data from such accounts. As a result, research on how compromised online accounts are used is sparse. To address this gap, we developed an infrastructure to monitor the activity of attackers on Gmail accounts. Our goal was to enable researchers to understand what happens to compromised webmail accounts in the wild, despite the lack of access to providers' proprietary data.

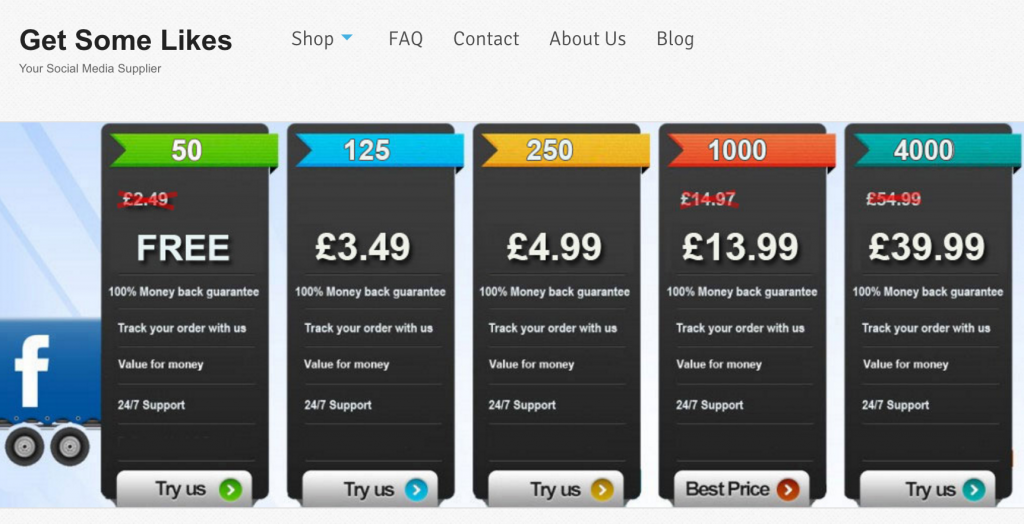

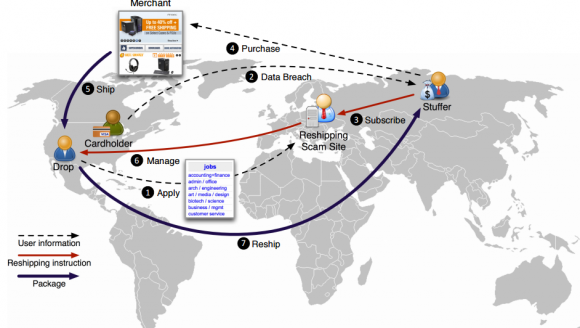

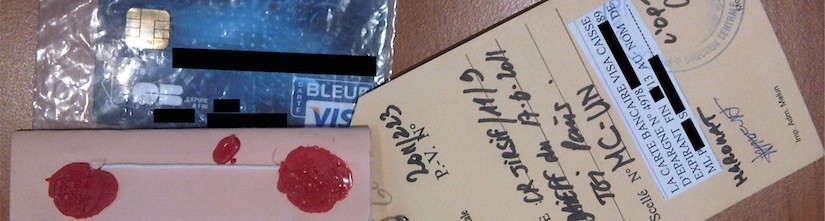

Cybercriminals usually sell stolen credentials on the underground black market or use them privately, depending on the value of the compromised accounts. Such accounts can be used to send spam to other online accounts or to retrieve sensitive personal or corporate information, among a myriad of other malicious uses. It is not uncommon to find password reset links, financial information, and authentication credentials for other online accounts inside compromised webmail accounts. This makes webmail accounts particularly attractive to cybercriminals, since the sensitive information they contain can potentially be used to compromise further accounts. For this reason, we focus on webmail accounts.

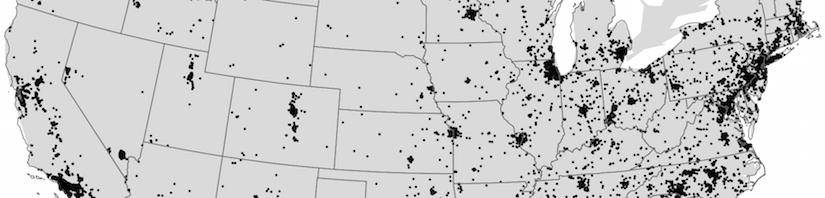

Our infrastructure works as follows. We embed scripts based on Google Apps Script in the Gmail accounts, and these scripts send us notifications of activity in the accounts. Such activity includes opening email messages, creating drafts, sending messages, and “starring” messages. We also record details of each access, including the visitor's IP address, browser information, and access time. Since we designed the Gmail accounts to lure cybercriminals into interacting with them, in the manner of a honeypot system, we refer to them as honey accounts.
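To make the idea of activity notifications concrete, here is a minimal Python sketch, not our actual Apps Script code, of one way a monitor could turn periodic scans of a honey account into the event types listed above. It assumes, hypothetically, that each scan captures the sets of read, starred, draft, and sent message IDs. Anything new in the later snapshot becomes an event. The names `MailboxSnapshot` and `diff_snapshots` are illustrative only.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class MailboxSnapshot:
    """Hypothetical state of a honey account captured at one periodic scan."""
    read_ids: frozenset = frozenset()
    starred_ids: frozenset = frozenset()
    draft_ids: frozenset = frozenset()
    sent_ids: frozenset = frozenset()


# Event label paired with the snapshot attribute it is derived from.
_EVENT_SOURCES = (
    ("opened", "read_ids"),
    ("starred", "starred_ids"),
    ("draft_created", "draft_ids"),
    ("sent", "sent_ids"),
)


def diff_snapshots(before, after):
    """Return (event, message_id) pairs for IDs that appear only in `after`.

    In a real deployment each event would be reported to the researchers
    (for example by email); this sketch just returns them.
    """
    events = []
    for label, attr in _EVENT_SOURCES:
        new_ids = getattr(after, attr) - getattr(before, attr)
        events.extend((label, msg_id) for msg_id in sorted(new_ids))
    return events
```

For example, if a visitor opens and stars message `m2` and sends message `s1` between two scans, `diff_snapshots` returns `[("opened", "m2"), ("starred", "m2"), ("sent", "s1")]`. Diffing snapshots is only one possible design. It can miss actions that are undone between scans (for example, starring and then unstarring a message), which is a trade-off of polling rather than reacting to events directly.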

To study webmail accounts stolen via malware, we also developed a malware sandbox infrastructure that executes information-stealing malware samples inside virtual machines (VMs). We supply honey credentials to the VMs, which drive web browsers to log in to the honey accounts automatically. The login action triggers the malware in the VMs to steal the honey credentials and exfiltrate them to command-and-control servers run by botmasters.
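One benefit of feeding honey credentials to malware in this way is that later accesses can be traced back to where the credentials leaked. The following Python sketch illustrates that bookkeeping. It assumes, as an illustration rather than a description of our setup, that each honey account is leaked through exactly one channel (for example, one malware sample). Observed accesses are then grouped by that channel. `AccessRecord`, `attribute_accesses`, and the channel labels are hypothetical names.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass(frozen=True)
class AccessRecord:
    """One observed login to a honey account (hypothetical record format)."""
    account: str      # honey account that was accessed
    ip: str           # visitor IP address
    timestamp: float  # access time, Unix seconds


def attribute_accesses(leak_channel, accesses):
    """Group accesses by the channel through which each account was leaked.

    `leak_channel` maps each honey account to the single channel it was
    leaked through (an assumption of this sketch). Accesses to accounts
    missing from the map are grouped under "unknown". Records keep their
    input order within each group.
    """
    by_channel = defaultdict(list)
    for record in accesses:
        channel = leak_channel.get(record.account, "unknown")
        by_channel[channel].append(record)
    return dict(by_channel)
```

For instance, with `{"honey01@example.com": "malware:sample-A"}` as the mapping, every access to `honey01@example.com` is filed under `malware:sample-A`. Using one credential per channel is what keeps this lookup unambiguous. If a credential were shared across several samples, accesses could not be attributed to a single source.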

Continue reading Understanding the Use of Leaked Webmail Credentials in the Wild