In this post, we discuss a new zk-SNARK, Sonic, developed by Mary Maller, Sean Bowe, Markulf Kohlweiss and Sarah Meiklejohn. Unlike other SNARKs, Sonic does not require a trusted setup for each circuit, but only a single setup for all circuits. Further, the setup for Sonic never has to end, so it can be continuously secured by accumulating more contributions. This property makes it ideal for any system where there is not a trusted party, and there is a need to validate data without leaking confidential information. For example, a company might wish to show solvency to an auditor without revealing which products they have invested in. The construction is highly practical.

More about zk-SNARKs

Like all other zero-knowledge proofs, zk-SNARKs are a tool used to build applications where users must prove the validity of their data, such as in verifiable computation or anonymous credentials. Additionally, zk-SNARKs have the smallest proof sizes and verifier time out of all other known techniques for building zero-knowledge proofs. However, they typically require a trusted setup process, introducing the possibility of fraudulent data being input by the actors that implemented the system. For example, Zcash uses zk-SNARKs to send private cryptocurrency transactions, and if their setup was compromised then a small number of users could generate an unlimited supply of currency without detection.

| Characteristics of zk-SNARKs |

🙂 Can be used to build many cryptographic protocols

🙂 Very small proof sizes

🙂 Very fast verifier time

😐 Average prover time

☹️ Requires a trusted setup

☹️ Security assumes non-standard cryptographic assumptions |

In 2018, Groth et al. introduced a zk-SNARK that could be built from an updatable and universal setup. We describe these properties below and claim that these properties help mitigate the security concerns around trusted setup. However, unlike Sonic, Groth et al.’s setup outputs a large set of global parameters (in the order of terabytes), which would be unwieldy to store, update and verify.

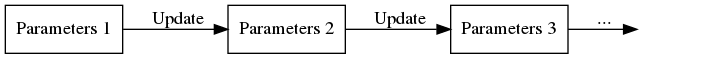

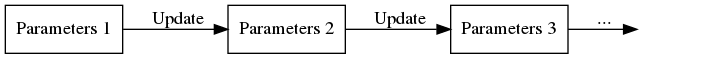

Updatability

Updatability means that any user, at any time, can update the parameters, including after the system goes live. After a single honest user has participated, no party can prove fraudulent data. This property means that a distrustful user could update the parameters themselves and have personal confidence in the parameters from that point forward. The update proofs are short and quick to verify.

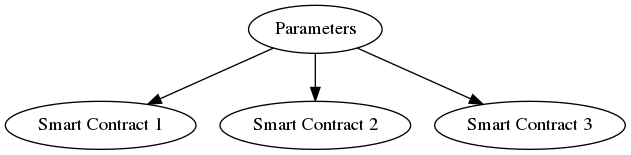

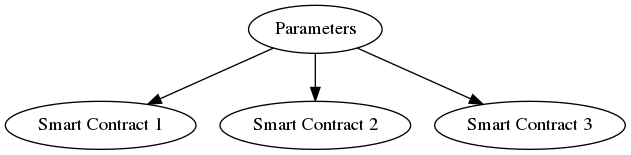

Universality

Universality means that the same parameters can be used for any application using this zk-SNARK. Thus one can imagine including the global parameters in an open-source implementation, or one could use the same parameters for all smart contracts in Ethereum.

Why Use Sonic?

Sonic is universal, updatable, and has a small set of global parameters (in the order of megabytes). Proof sizes are small (256 bytes) and verifier time is competitive with the fastest zk-SNARKs in the literature. It is especially well suited to systems where the same zk-SNARK is run by many different provers and verified by many different parties. This is exactly the situation for many blockchain systems.

Continue reading Introducing Sonic: A Practical zk-SNARK with a Nearly Trustless Setup