In this post, we discuss our new zero-knowledge proving system, Marlin, by Chiesa, Hu, Maller, Mishra, Vesely, and Ward. This year has been the year of the universal SNARK, with Sonic, Libra, and Plonk all bidding for attention. Marlin is yet another competitor, one which we recommend using when you require fast verification time without the use of batching.

Why Universal SNARKs?

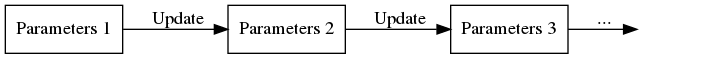

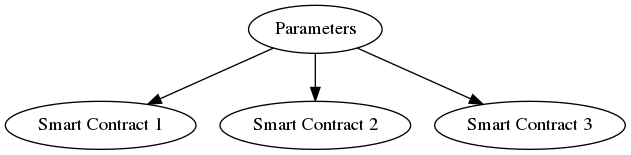

A universal SNARK is a proving system in which a single trusted setup suffices to prove anything that we know how to prove. That means that the same setup could be used across all applications and that parameters could be stored in a general-purpose library. Additionally, these universal SNARKs typically have relatively easy to coordinate setup procedures, which makes it easier to convince users that the procedure has been carried out correctly and securely.

Some SNARKs avoid setup procedures altogether. Such works include Spartan, Halo, and Hyrax. However, the cost of avoiding a trusted setup can generally be seen in the proof sizes and verification time.

Marlin or Sonic?

In this authors’ humble opinion, Sonic is fabulous. The proofs are small, the provers are fast, and the verification is fast provided one is verifying many proofs at the same time. For applications that use batched verifications, Sonic currently remains the state-of-the-art. Cryptocurrency transactions are a classic example of this – nodes can verify all the transactions in a new block simultaneously (provided the miner aggregates the transactions). However, this setting in which Sonic excels, i.e. the setting in which the verifier is not just given a single proof but many, many proofs of the same thing, is not always a given. For an example where Sonic’s batched proofs would not suffice, consider a randomness beacon. Here verification of the beacons outputs is only done once in a while. Therefore it would be a setting where batching is totally inappropriate.

Continue reading A Marlin is One of the Fastest SNARKs in the Ocean