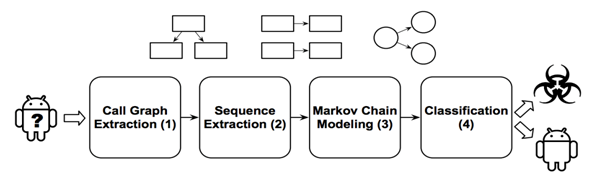

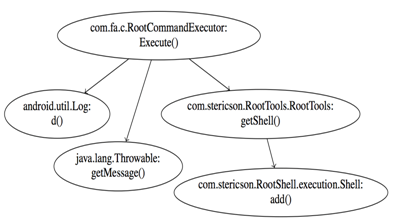

In this blog post, we describe and comment on TESSERACT, a system introduced in a paper to appear at USENIX Security 2019 and previously published as a pre-print. TESSERACT is a publicly available framework for evaluating and comparing systems based on statistical classifiers, with a particular focus on Android malware classification. The authors used DREBIN and our MaMaDroid paper as examples for this evaluation. They chose these two because they are among the most important state-of-the-art papers and tackle the challenge from different angles, using different models and different machine learning algorithms. Moreover, DREBIN has already been reproduced by researchers even though its code is no longer available, while MaMaDroid’s code is publicly available (the parsed data and the list of samples are available upon request). I am one of MaMaDroid’s authors, and I am particularly interested in projects like TESSERACT. Therefore, I will go through this interesting framework and attempt to clarify a few misinterpretations of MaMaDroid made by the authors.

The need for evaluation frameworks

The information security community, and the systems part of it in particular, often feels that papers are rejected on questionable grounds or, on the other hand, that papers should be more rigorous and respect certain important characteristics. Researchers from Dutch universities surveyed papers published at top venues in 2010 and 2015, evaluating whether these works committed “crimes” affecting the completeness, relevancy, soundness, and reproducibility of the work. They showed that the more recent publications present more flaws. Even though the authors included their own works among those analyzed and did not word the paper as a wall of shame by pointing the finger at specific articles, the paper has been seen as an attack on the community rather than an encouragement to produce more complete papers. To the best of my knowledge, unfortunately, the paper has not yet been accepted for publication. TESSERACT is another example of researchers’ efforts to make the community’s work more rigorous: most systems papers report accuracies close to 100% in all the tests performed; however, when some of these systems were tested on different datasets, their accuracy was worse than a coin toss.

These two works are part of a trend that I personally find important for our community, because it allows works that chronologically follow others to be evaluated more fairly. Let me explain with a personal example: I recall my supervisor telling me that, at the beginning, he was not optimistic about MaMaDroid being accepted at the first attempt (NDSS 2017), because most of the previous literature reported accuracies consistently over 98%, and a gap of a few percentage points can be enough for some reviewers to reject a paper. When we asked a colleague for his opinion on the paper before submitting it for peer review, this was his comment on the ML part: “I actually think the ML part is super solid, and I’ve never seen a paper with so many experiments on this topic.” The same part of the work can thus draw completely different reactions.

TESSERACT

The goal of this post is to show TESSERACT’s potential while pointing out the small misinterpretations of MaMaDroid present in the current version of the paper. The authors contacted us so that we could read the paper and check whether there had been any misinterpretation. I had a constructive meeting with them, where we also had the opportunity to exchange opinions on the work. The description of TESSERACT will be followed by a section on the misinterpretations of MaMaDroid in the paper. The authors told me that newer versions of the paper would be updated according to what we discussed.
