This morning, BBC Rip Off Britain focused on push payment fraud, featuring an interview with me (starts at 34:20). The distinction between push and pull payments should be a matter for payment system geeks, and certainly isn’t at the front of customers’ minds when they make a payment. However, there’s a big difference when there’s fraud – for online pull payments (credit and debit card) the bank will give the victim the money back in many situations; for online push payments (Faster Payment System and Standing Orders) the full liability falls on the party least able to protect themselves – the customer.

The banking industry doesn’t keep good statistics about push payment fraud, but it appears to be increasing: Which? received reports from over 650 victims in the first two weeks of November 2016, with losses totalling over £5.5 million. Today’s programme puts a human face to these statistics by presenting the case of Jane and Steven Caldwell, who were defrauded of over £100,000 from their Nationwide and NatWest accounts.

They were called up at the weekend by someone who said he was working for NatWest. To verify that this was the case, Jane used three methods. Firstly, she checked caller-ID to confirm that the number was indeed the bank’s own customer helpline – it was. Secondly, she confirmed that the caller had access to Jane’s transaction history – he did. Thirdly, she called the bank’s customer helpline, and the caller knew this was happening despite the original call being muted.

Convinced by these checks, Jane transferred funds from her own accounts to another account, supposedly in her own name, having been told by the caller that this was necessary to protect against fraud. Unfortunately, the caller was a scammer. Experts featured on the programme suspect that caller-ID was spoofed (quite easy, due to lack of end-to-end security for phone calls), and that malware on Jane’s laptop allowed the scammer to see transaction history on her screen, as well as to listen to and see her call to the genuine customer helpline through the computer’s microphone and webcam. The bank didn’t check that the name Jane gave (her own) matched that of the recipient account, so the scammer had full access to the transferred funds, which he quickly moved to other accounts. Only Nationwide was able to recover any money – £24,000 – leaving Jane and Steven over £75,000 out of pocket.
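To make the missing name check concrete, here is a minimal Python sketch of the kind of comparison a bank could run before releasing a push payment. It is an illustration under stated assumptions, not a description of any bank’s system: the function names `normalise_name` and `payee_name_matches` are hypothetical, the recipient name “A N Other” is made up, and a real deployment would need fuzzy matching for nicknames, joint accounts and business names.

```python
import unicodedata


def normalise_name(name: str) -> str:
    """Lower-case a name, strip accents and punctuation, collapse whitespace.

    Hypothetical helper for illustration only.
    """
    # NFKD splits accented letters into a base letter plus combining marks;
    # the marks are neither alphanumeric nor whitespace, so they are dropped.
    decomposed = unicodedata.normalize("NFKD", name)
    kept = [c for c in decomposed if c.isalnum() or c.isspace()]
    return " ".join("".join(kept).lower().split())


def payee_name_matches(name_given_by_payer: str, name_on_recipient_account: str) -> bool:
    """Return True only if the two names are identical after normalisation.

    Assumption: an exact match on normalised names. This is deliberately
    strict and would produce false mismatches in practice.
    """
    return normalise_name(name_given_by_payer) == normalise_name(name_on_recipient_account)


# Jane gave her own name, but the recipient account belonged to someone else.
# "A N Other" is a made-up account name, not taken from the programme.
if not payee_name_matches("Jane Caldwell", "A N Other"):
    print("Name mismatch: warn the customer before sending the payment")
```

Even a strict check like this would have flagged the transfer in Jane’s case, because the name she entered was her own and the recipient account was not hers.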

Neither bank offered Jane and Steven a refund, because they classed the transaction as “authorised” and therefore as falling into one of the exceptions to the EU Payment Services Directive requirement to refund victims of fraud (the other exception being if the bank believed the customer acted either with gross negligence or fraudulently). The banks argued that their records showed that the customer’s authentication device was used and hence the transaction was “authorised”. In the original draft of the Payment Services Directive this argument would not have been sufficient, but as a result of concerted lobbying by Barclays and other UK banks for their records to be considered conclusive, the word “necessarily” was inserted into Article 72, thereby removing this important consumer protection.

“Where a payment service user denies having authorised an executed payment transaction, the use of a payment instrument recorded by the payment service provider, including the payment initiation service provider as appropriate, shall in itself not necessarily be sufficient to prove either that the payment transaction was authorised by the payer or that the payer acted fraudulently or failed with intent or gross negligence to fulfil one or more of the obligations under Article 69.”

Clearly the fraudulent transactions do not meet any reasonable definition of “authorised” because Jane did not give her permission for funds to be transferred to the scammer. She carried out the transfer because the way that banks commonly authenticate themselves to customers they call (proving that they know your account details) was unreliable, because the recipient bank didn’t check the account name, because bank fraud-detection mechanisms didn’t catch the suspicious nature of the transactions, and because the bank’s authentication device is too confusing to use safely. When the security of the payment system is fully under control of the banks, why is the customer held liable when a person acting with reasonable care could easily do the same as Jane?

Another question is whether banks do enough to recover funds lost through scams such as this. The programme featured an interview with barrister Gideon Roseman, who quickly obtained court orders allowing him to recover most of his funds lost through a similar scam. Interestingly, a side-effect of the court orders was that he discovered that his bank, Barclays, waited more than 24 hours after learning about the fraud before acting to stop the stolen money being transferred out. After being caught out, Barclays refunded Gideon the affected funds, but in cases where the victim isn’t a barrister specialising in exactly these sorts of disputes, do the banks do all they could to recover stolen money?

To give banks proper incentives to prevent push payment fraud where possible and to recover stolen funds in the remainder of cases, Which? called for the Payment Systems Regulator to make banks liable for push payment fraud, just as they are for pull payments. I agree, and expect that if this were the case banks would implement innovative fraud prevention mechanisms against push payment fraud that we currently only see for credit and debit transactions. I also argued that in implementing the revised Payment Services Directive, the European Banking Authority should require banks to provide evidence that a customer was aware of the nature of the transaction and gave informed consent before they can hold the customer liable. Unfortunately, both the Payment Systems Regulator and the European Banking Authority conceded to the banking industry’s request to maintain the current poor state of consumer protection.

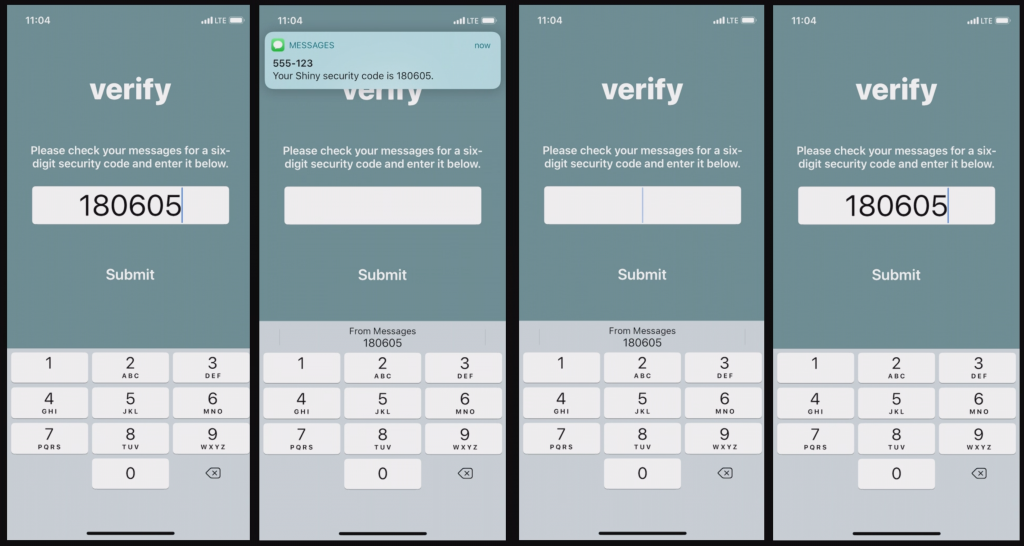

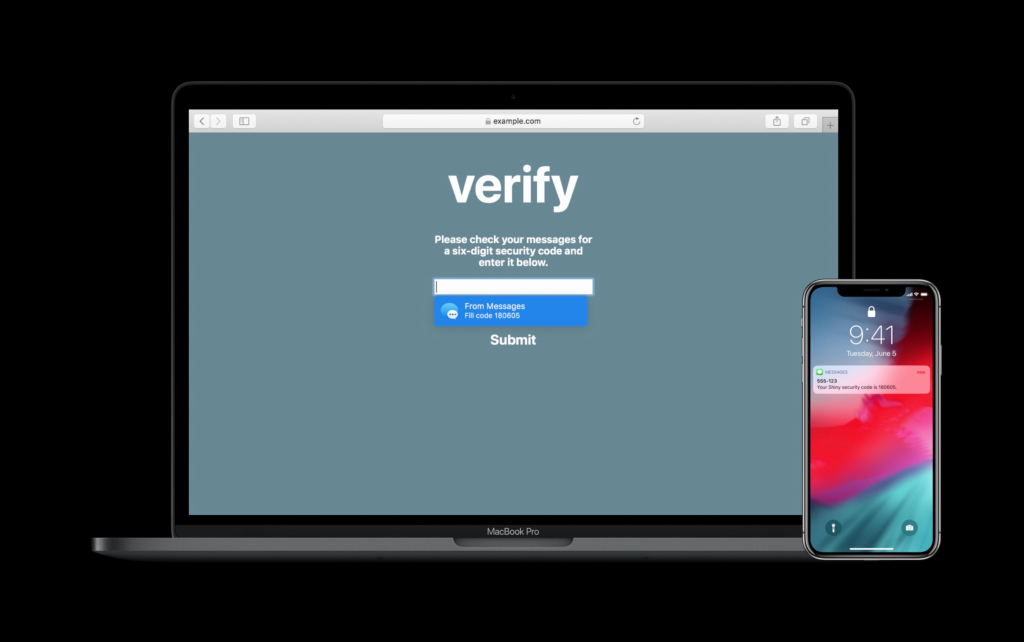

The programme concluded with security advice, as usual. Some was actively misleading, such as the claim by NatWest that banks will never ask customers to transfer money between their accounts for security reasons. My bank called me to ask that I transfer money from my current account to my savings account, for precisely this reason (I called them back to confirm it really was them). Some advice was vague and not actionable (e.g. “be vigilant” – in response to a case where the victim was extremely cautious and still got caught out). Probably the most helpful recommendation is that if a bank supposedly calls you, wait 5 minutes and call them back using the number on a printed statement or card, preferably from a different phone. Alternatively, stick to using cheques – they are slow and banks discourage their use (because they are expensive for them to process), but they are much safer for the customer. However, such advice should not be considered an alternative to pushing liability back where it belongs – the banks – which will not only reduce fraud but also protect vulnerable customers.