In the run-up to this year’s Privacy Enhancing Technologies Symposium (PETS 2019), I noticed some decidedly non-privacy-enhancing behaviour. Transport for London (TfL) announced they will be tracking the wifi MAC addresses of devices being carried on London Underground stations. Before storing a MAC address it will be hashed with a key, but since this key will remain unchanged for an extended period (2 years), it will be possible to track the movements of an individual over this period through this pseudonymous ID. These traces are likely enough to link records back to the individual with some knowledge of that person’s distinctive travel plans. Also, for as long as the key is retained it would be trivial for TfL (or someone who stole the key) to convert the someone’s MAC address into its pseudonymised form and indisputably learn that that person’s movements.

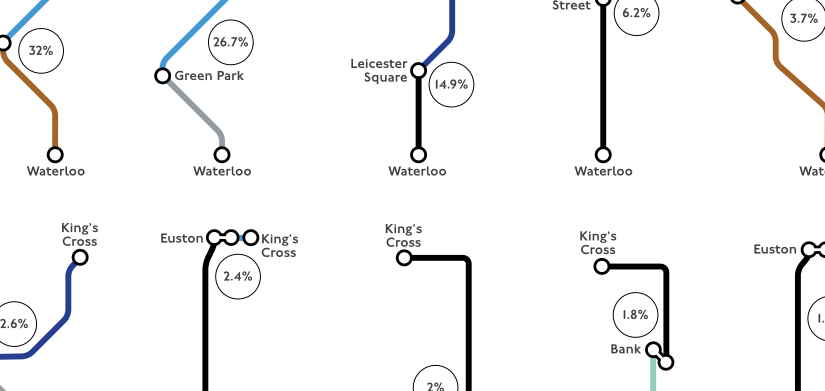

TfL argues that under the General Data Protection Regulations (GDPR), they don’t need the consent of individuals they monitor because they are acting in the public interest. Indeed, others have pointed out the value to society of knowing how people typically move through underground stations. But the GDPR also requires that organisations minimise the amount of personal data they collect. Could the same goal be achieved if TfL irreversibly anonymised wifi MAC addresses rather than just pseudonymising them? For example, they could truncate the hashed MAC address so that many devices all have the same truncated anonymous ID. How would this affect the calculation of statistics of movement patterns within underground stations? I posed these questions in a presentation at the PETS 2019 rump session, and in this article, I’ll explain why a set of algorithms designed to violate people’s privacy can be applied to collect wifi mobility information while protecting passenger privacy.

It’s important to emphasise that TfL’s goal is not to track past Underground customers but to predict the behaviour of future passengers. Inferring past behaviours from the traces of wifi records may be one means to this end, but it is not the end in itself, and TfL creates legal risk for itself by holding this data. The inferences from this approach aren’t even going to be correct: wifi users are unlikely to be typical passengers and behaviour will change over time. TfL’s hope is the inferred profiles will be useful enough to inform business decisions. Privacy-preserving measurement techniques should be judged by the business value of the passenger models they create, not against how accurate they are at following individual passengers around underground stations in the past. As the saying goes, “all models are wrong, but some are useful”.

Simulating privacy-preserving mobility measurement

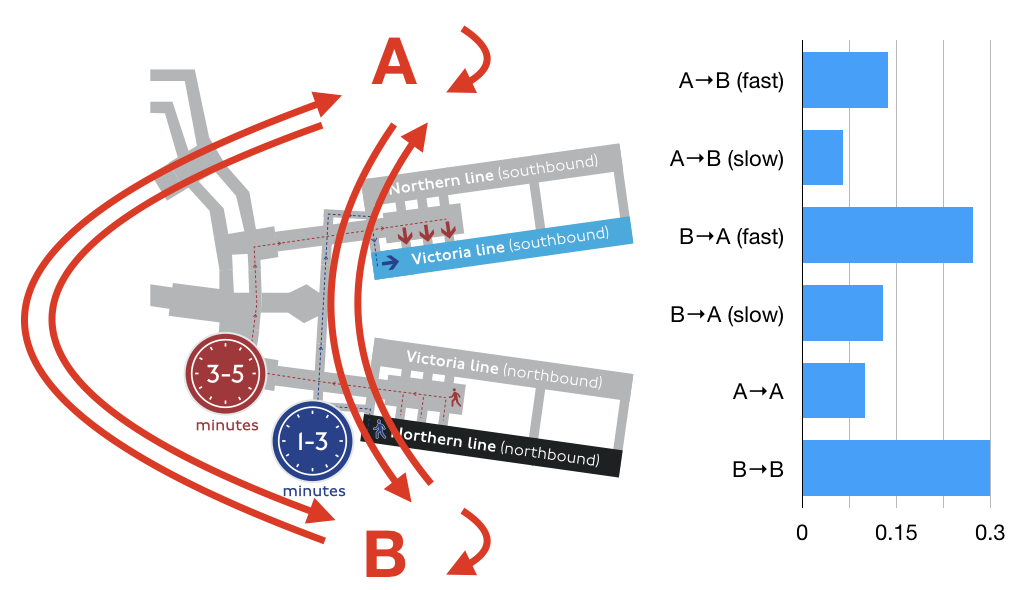

To explore this space, I built a simple simulation of Euston Station inspired by one of the TfL case studies. In my simulation, there are two platforms (A and B) and six types of passengers. Some travel from platform A to B; some from B to A; others enter and leave the station at one platform (A or B). Of the passengers that travel between platforms, they can take either the fast route (taking 2 minutes on average) or the slow route (taking 4 minutes on average). Passengers enter the station at a Poisson arrival rate averaging one per second. The probabilities that each new passenger is of a particular type are shown in the figure below. The goal of the simulation is to infer the number of passengers of each type from observations of wifi measurements taken at platforms A and B.