Thanks to their resilience, integrity, and transparency properties, blockchains have gained much traction recently, with applications ranging from banking and energy sector to legal contracts and healthcare. Blockchains initially received attention as Bitcoin’s underlying technology. But for all its success as a popular cryptocurrency, Bitcoin suffers from scalability issues: with a current block size of 1MB and 10 minute inter-block interval, its throughput is capped at about 7 transactions per second, and a client that creates a transaction has to wait for about 10 minutes to confirm that it has been added to the blockchain. This is several orders of magnitude slower that what mainstream payment processing companies like Visa currently offer: transactions are confirmed within a few seconds, and have ahigh throughput of 2,000 transactions per second on average, peaking up to 56,000 transactions per second. A reparametrization of Bitcoin can somewhat assuage these issues, increasing throughput to to 27 transactions per second and 12 second latency. Smart contract platforms, such as Ethereum inherit those scalability limitations. More significant improvements, however, call for a fundamental redesign of the blockchain paradigm.

This week we published a pre-print of our new Chainspace system—a distributed ledger platform for high-integrity and transparent processing of transactions within a decentralized system. Chainspace uses smart contracts to offer extensibility, rather than catering to specific applications such as Bitcoin for a currency, or certificate transparency for certificate verification. Unlike Ethereum, Chainspace’s sharded architecture allows for a ledger linearly scalable since only the nodes concerned with the transaction have to process it. Our modest testbed of 60 cores achieves 350 transactions per second. In comparison, Bitcoin achieves a peak rate of less than 7 transactions per second for over 6k full nodes, and Ethereum currently processes 4 transactions per second (of a theoretical maximum of 25). Moreover, Chainspace is agnostic to the smart contract language, or identity infrastructure, and supports privacy features through modern zero-knowledge techniques. We have released the Chainspace whitepaper, and the code is available as an open-source project on GitHub.

System Overview

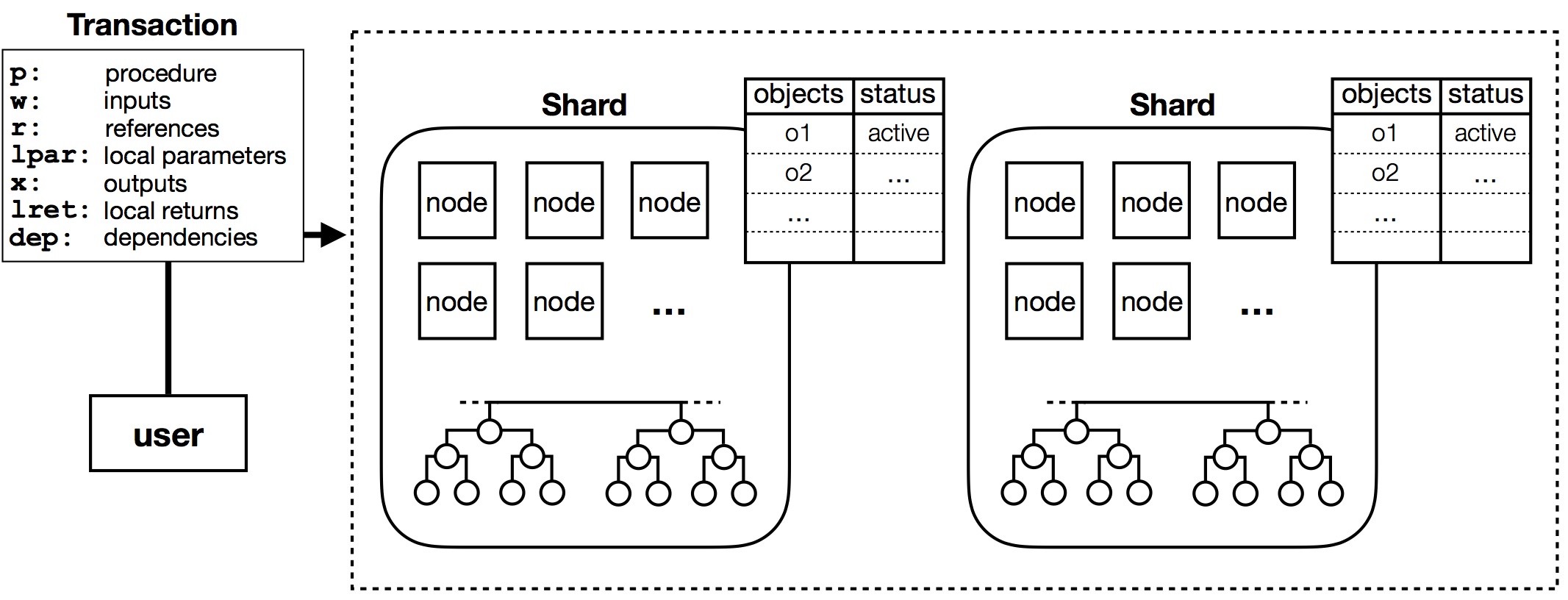

The figure above illustrates the system design of Chainspace. Chainspace is comprised of a network of infrastructure nodes that manage valid objects and ensure that only valid transactions on those objects are committed. Let’s look at the data model of Chainspace first. An object represents a unit of data in the Chainspace system (e.g., a bank account), and is in one of the following three states: active (can be used by a transaction), locked (is being processed by an existing transaction), or inactive (was used by a previous transaction). Objects also have a type that determines the unique identifier of the smart contract that defines them. Smart contract procedures can operate on active objects only, while inactive objects are retained just for the purposes of audit. Chainspace allows composition of smart contracts from different authors to provide ecosystem features. Each smart contract is associated with a checker to enable private processing of transactions on infrastructure nodes since checkers do not take any secret local parameters. Checkers are pure functions (i.e., deterministic, and have no side-effects) that return a boolean value.

Now, a valid transaction accepts active input objects along with other ancillary information, and generates output objects (e.g., transfers money to another bank account). To achieve high transaction throughput and low latency, Chainspace organizes nodes into shards that manage the state of objects, keep track of their validity, and record transactions aborted or committed. We implemented this using Sharded Byzantine Atomic Commit (S-BAC)—a protocol that composes existing Byzantine Fault Tolerant (BFT) agreement and atomic commit primitives in a novel way. Here is how the protocol works:

- Intra-shard agreement. Within each shard, all honest nodes ensure that they consistently agree on accepting or rejecting a transaction.

- Inter-shard agreement. Across shards, nodes must ensure that transactions are committed if all shards are willing to commit the transaction, and rejected (or aborted) if any shards decide to abort the transaction.

Consensus on committing (or aborting) transactions takes place in parallel across different shards. A nice property of S-BAC’s atomic commit protocol is that the entire shard—rather than a third party—acts as a coordinator. This is in contrast to other sharding-based systems with cryptocurrency application like OmniLedger or RSCoin where an untrusted client acts as the coordinator, and is incentivized to act honestly. Such incentives do not hold for a generalized platform like Chainspace where objects may have shared ownership.

Continue reading Chainspace: A Sharded Smart Contracts Platform