The increasing availability of location and mobility data enables a number of applications, e.g., enhanced navigation services and parking, context-based recommendations, or waiting time predictions at restaurants, which have great potential to improve the quality of life in modern cities. However, the large-scale collection of location data also raises privacy concerns, as mobility patterns may reveal sensitive attributes about users, e.g., home and work places, lifestyles, or even political or religious inclinations.

Service providers, i.e., companies with access to location data, often use aggregation as a privacy-preserving strategy to release these data to third parties for various analytics tasks. The idea is that, by grouping together users’ traces, the data no longer contain information that enables inferences about individuals such as the ones mentioned above, while they can still be used to obtain useful insights about crowds. For instance, Waze constructs aggregate traffic models to improve navigation within cities, while Uber provides aggregate data for urban planning purposes. Similarly, CityMapper’s Smart Ride application aims at identifying gaps in transportation networks based on traces and rankings collected by users’ mobile devices, while Telefonica monetizes aggregate location statistics through advertising as part of the Smart Steps project.

That’s great, right? Well, our paper, “Knock Knock, Who’s There? Membership Inference on Aggregate Location Data”, published at NDSS 2018, shows that aggregate location time-series can in fact be used to infer information about individual users. In particular, we demonstrate that aggregate locations are prone to a privacy attack known as membership inference, in which a malicious entity aims to identify whether a specific individual contributed her data to the aggregation. We demonstrate the feasibility of this attack both in a proof-of-concept setting designed for an academic evaluation and in a real-world setting, where we apply membership inference attacks in the context of the Aircloak challenge, the first bounty program for anonymized data re-identification.

Membership Inference Attacks on Aggregate Location Time-Series

Our NDSS’18 paper studies membership inference attacks on aggregate location time-series indicating the number of people transiting in a certain area at a given time. That is, we show that an adversary with some “prior knowledge” about users’ movements is able to train a machine learning classifier and use it to infer the presence of a specific individual’s data in the aggregates.

We experiment with different types of prior knowledge. On the one hand, we simulate powerful adversaries that know the real locations for a subset of users in a database during the aggregation period (e.g., telco providers which have location information about their clients); on the other hand, we simulate weaker ones that only know past statistics about user groups (i.e., reproducing a setting of continuous data release). Overall, we find that the adversarial prior knowledge significantly influences the effectiveness of the attack.

But why is this type of inference detrimental to user privacy? For starters, membership inference can be just the first step towards other privacy attacks on aggregate locations, like trajectory extraction or user profiling. Also, an aggregate location dataset might actually relate to a group of individuals sharing a sensitive characteristic (e.g., imagine that a hospital or a city authority publishes mobility patterns of users with a certain disease or income for research purposes); inferring a user’s presence in the dataset then implies learning another attribute about her (i.e., the fact that she suffers from the disease, or her income). On the bright side, membership inference can be used by service providers to evaluate the quality of privacy protection of an aggregate dataset before releasing it to third parties, or by regulators to detect possible misuse of the data.

An Experimental Evaluation on Two Transport Datasets

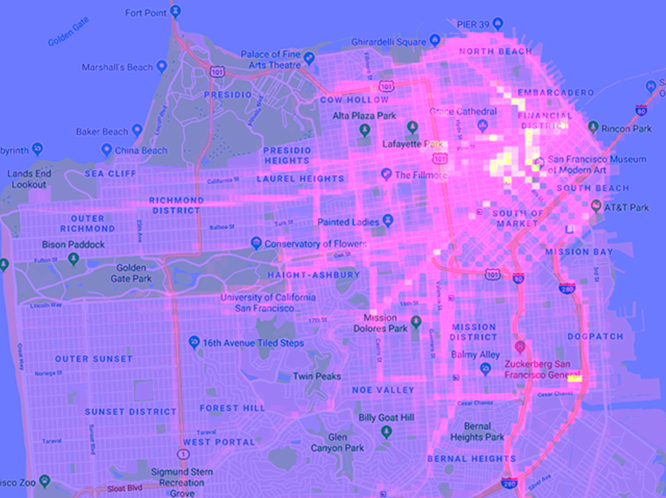

We used two real-world mobility datasets to capture diverse mobility patterns: trajectories generated by taxis in the San Francisco area, and check-ins at stations by commuters in London’s transportation network. The two datasets have different characteristics: GPS trajectories are dense, i.e., taxis generate lots of data points as they move around the Bay Area, while commuting patterns are highly repetitive, since Londoners typically commute to their workplaces during the week.

To launch membership inference attacks, we followed a “sample and aggregate” methodology with respect to adversarial prior knowledge. We first sampled groups of users that included a target victim and groups that excluded her. Then, we aggregated the location data of each group and fed the aggregates, along with the corresponding label (i.e., whether the victim’s data was included or not), to a classifier that learned how to distinguish between the two cases. We tested the classifier’s performance on aggregate locations of groups that were not used for training and calculated its Area Under the Curve (AUC) score, which measures how reliably it distinguishes aggregates that include the victim’s data from those that do not.
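The pipeline above can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the traces are synthetic, the constants (`N_USERS`, `N_CELLS`, `N_EPOCHS`, `GROUP_SIZE`) are made up, and a simple mean-difference linear classifier stands in for whatever model the paper actually used.

```python
import random

# Hypothetical toy parameters, not taken from the paper.
N_USERS, N_CELLS, N_EPOCHS, GROUP_SIZE = 30, 20, 10, 5
VICTIM = 0  # id of the target user


def make_traces(rng):
    """Synthetic prior knowledge: one cell per user per epoch."""
    return [[rng.randrange(N_CELLS) for _ in range(N_EPOCHS)] for _ in range(N_USERS)]


def aggregate(traces, group):
    """Flattened time-series: number of group members in each cell at each epoch."""
    counts = [0] * (N_EPOCHS * N_CELLS)
    for user in group:
        for epoch, cell in enumerate(traces[user]):
            counts[epoch * N_CELLS + cell] += 1
    return counts


def sample_groups(rng, traces, n, include_victim, seen):
    """Sample n previously unseen groups that include or exclude the victim."""
    others = [u for u in range(N_USERS) if u != VICTIM]
    samples = []
    while len(samples) < n:
        if include_victim:
            group = frozenset([VICTIM] + rng.sample(others, GROUP_SIZE - 1))
        else:
            group = frozenset(rng.sample(others, GROUP_SIZE))
        if group not in seen:  # keeps test groups disjoint from training groups
            seen.add(group)
            samples.append(aggregate(traces, group))
    return samples


def mean_vector(rows):
    return [sum(col) / len(rows) for col in zip(*rows)]


def train(in_rows, out_rows):
    """Mean-difference classifier: weights point from the 'out' centroid to the 'in' centroid."""
    return [a - b for a, b in zip(mean_vector(in_rows), mean_vector(out_rows))]


def score(weights, row):
    return sum(w * x for w, x in zip(weights, row))


def auc(pos_scores, neg_scores):
    """Fraction of (in, out) pairs ranked correctly; ties count as half."""
    wins = sum((p > n) + 0.5 * (p == n) for p in pos_scores for n in neg_scores)
    return wins / (len(pos_scores) * len(neg_scores))


def run_attack(seed=0):
    rng = random.Random(seed)
    traces = make_traces(rng)
    seen = set()
    train_in = sample_groups(rng, traces, 200, True, seen)
    train_out = sample_groups(rng, traces, 200, False, seen)
    test_in = sample_groups(rng, traces, 100, True, seen)
    test_out = sample_groups(rng, traces, 100, False, seen)
    weights = train(train_in, train_out)
    return auc([score(weights, r) for r in test_in],
               [score(weights, r) for r in test_out])


if __name__ == "__main__":
    print(f"AUC on held-out groups: {run_attack():.2f}")
```

With groups this small the victim’s contribution stands out clearly, so the toy attack separates the two cases easily; increasing `GROUP_SIZE` is a quick way to see how larger aggregation groups make the task harder.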

We evaluated the attack’s performance for an adversary who knows the location data for a subset of taxis in the San Francisco database and attempts to infer membership in aggregates computed over a one-week period. Our classifier achieved up to 0.8 AUC, which corresponds to a high privacy loss. Similarly, we evaluated the attack for an adversary who knows past statistics about the groups over which aggregation is performed on the commuter database, and found that membership inference was also very successful (AUC of 1.0).

Overall, our evaluation showed that aggregate location time-series are prone to membership inference attacks. The factors that we identified as affecting the attack’s performance include the adversarial prior knowledge, the number of users contributing to the aggregation, the aggregation time frame (length and semantics), and the mobility characteristics of the dataset. Regarding the latter, we found that in weaker adversarial settings, regular commuting patterns, like always taking the same route to work on weekdays, are more prone to membership inference than irregular GPS trajectories.

Defenses?

We then examined potential defenses one could use to mitigate the membership inference attack. In particular, we focused on mechanisms that achieve the guarantees of differential privacy (DP). Differential privacy ensures that a query does not reveal whether any one person is present in a dataset. Suppose you have two datasets that are identical except for one particular user’s data: a DP mechanism adds noise so that the probability that a query produces a certain result is almost the same on either dataset. In other words, if a user’s data barely affects the result of a query, then it is unlikely that the result reveals sensitive information about that user.
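For reference, the intuition above corresponds to the standard textbook definition of ε-differential privacy, which the post does not spell out. A randomized mechanism M is ε-differentially private if, for every pair of datasets D and D′ that differ in one user’s data and every set of possible outputs S:

```latex
\Pr[\mathcal{M}(D) \in S] \;\le\; e^{\varepsilon} \cdot \Pr[\mathcal{M}(D') \in S]
```

The smaller ε is, the closer the two output distributions must be, and the less an observer can learn about whether a given user’s data was included.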

We experimentally evaluated commonly used DP mechanisms as well as specialized ones that were originally proposed for time-series settings. Typically, DP mechanisms perturb the answers of a computation (i.e., the aggregate location counts in our case) with noise drawn from a properly configured statistical distribution and output the noisy answers to protect the privacy of individual users. To this end, our experiments captured from a practical perspective the privacy protection that one could get by employing such mechanisms as well as the utility loss stemming from the release of noisy data.
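As an illustration of this kind of perturbation, here is a minimal sketch of the Laplace mechanism applied to a table of location counts. The post does not say which mechanisms were evaluated, so this should not be read as the paper’s setup: the toy counts, the function names, and the sensitivity assumption (each user appears in at most one location per epoch) are all ours.

```python
import random


def laplace_noise(rng, scale):
    """Laplace(0, scale) sample, drawn as the difference of two exponentials."""
    return rng.expovariate(1 / scale) - rng.expovariate(1 / scale)


def perturb_counts(counts, epsilon, rng):
    """Add Laplace noise to a table of counts (rows = epochs, columns = locations).

    Assumption: a user is in at most one location per epoch, so adding or
    removing one user changes the table by at most len(counts) in L1 norm.
    """
    sensitivity = len(counts)
    scale = sensitivity / epsilon
    return [[c + laplace_noise(rng, scale) for c in epoch] for epoch in counts]


def mean_abs_error(true_counts, noisy_counts):
    """Average absolute difference between true and noisy counts (utility loss)."""
    diffs = [abs(t - n)
             for true_row, noisy_row in zip(true_counts, noisy_counts)
             for t, n in zip(true_row, noisy_row)]
    return sum(diffs) / len(diffs)


# Toy data: 4 epochs x 3 locations.
true_counts = [[12, 0, 3], [9, 1, 5], [14, 2, 0], [10, 0, 4]]
rng = random.Random(0)
for epsilon in (0.1, 1.0, 10.0):
    noisy = perturb_counts(true_counts, epsilon, rng)
    print(f"epsilon={epsilon}: mean absolute error = "
          f"{mean_abs_error(true_counts, noisy):.2f}")
```

Because the noise scale grows with the number of released epochs and shrinks with ε, long time-series released under a small privacy budget get noisy quickly, which is one way to see why utility suffers in this setting.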

Overall, our results showed that while DP thwarted membership inference attacks on aggregate location time-series, the utility loss was non-negligible. More precisely, we found that non-specialized mechanisms do not apply well in this setting as they introduce huge amounts of noise. On the other hand, mechanisms specifically proposed for time-series settings yield much better utility at the cost of reduced privacy, indicating that obtaining an optimal privacy/utility trade-off is a challenging task. To this end, we are hopeful that our results will motivate the research community to propose novel mechanisms balancing privacy and utility for analytics tasks on aggregate location data more efficiently.

Participating in the Aircloak Challenge

Aircloak offers a data anonymization product that aims for strong anonymity while providing high-quality data analytics. They have started a series of bounty programs as a means to discover weaknesses in their algorithms and continuously strengthen their product’s anonymization. The first 6-month bounty program, called the Aircloak Attack Challenge, was launched in late 2017.

Challenge participants (“attackers”) were given access to an SQL interface that allowed them to query a raw database through Aircloak’s anonymization layer, Diffix. The latter accepts SQL queries and returns anonymized answers to the analyst by suppressing answers that do not aggregate enough users, and by adding noise based on both the semantics of the query and the contents of the answer. The details of the anonymization technology as well as the noise addition mechanisms were described in the Extended Diffix paper. Challenge participants were allowed to perform an unlimited number of queries to various databases (including census, location, and banking ones) through Aircloak’s system. The ultimate goal was, using some amount of prior knowledge, to perform one of the following types of attacks:

- Singling out: where the aim of an attacker is to determine with high confidence that there is a single user with certain attributes

- Inference: an attacker that knows some attributes about a user aims at inferring some unknown attributes about her

- Linkability: where an attacker, given a linkability (i.e., publicly known) database and a protected one, attempts to infer whether a user of the linkability database is also in the protected one.

These types of attacks are described in the EU Article 29 Data Protection Working Party’s Opinion 05/2014 and are currently considered by the European Union as major risk factors against anonymization technologies.

To evaluate an attack’s performance, the Aircloak Challenge rules described a few scores:

- Confidence captures the likelihood that an attacker’s claim is correct

- Confidence improvement is the increase of the attacker’s confidence over a baseline statistical guess

- Effectiveness is the ratio of the information learnt by an attack to the amount of prior knowledge required for it.

The combination of an attack’s effectiveness and confidence improvement would determine the bounty a participant would be awarded.

In January 2018, we entered the challenge since our research around membership inference on location aggregates sounded like a good match for the linkability attack described in the challenge’s rules. In particular, we employed the location database provided by Aircloak, containing taxi trip records in the city of New York over one day, which was split into a public (linkability) database and a protected one. Half of the users in the public database were also in the protected one (and each database had full taxi records), while the other half in each database were unique to that database. The public database represented open public knowledge, and the goal of the attack was to determine whether users in the public database were also in the protected database. To this end, we adapted our machine learning methodology to perform the linkability attack employing the ideas described next.

Similar to the Knock Knock paper, the core idea was to train a classifier that learns to distinguish whether a user’s data is present or absent when querying a spatio-temporal database for the number of users in a specific location at a certain time. To this end, we first selected a sample of taxi drivers from the linkability database to work with. Then, we defined our spatio-temporal setting, i.e., our queries focused on an area over New York’s Manhattan and a few epochs over the one day’s worth of data in the NYC taxi database.

However, to confront the challenge we had to adapt our “sample and aggregate” methodology (described earlier). In particular, we had to model two types of noise during the classifier’s training. The first type of noise was the absence of some users in the linkability database from the protected one. Due to the way the original taxi database was split into the linkability and protected parts, some of the users that we would include in the training groups on the linkability part might not be present when testing on the protected one. The second type was the noise introduced by Aircloak Insights in the answers to our spatio-temporal queries. To model the first type of noise we trained, as a pre-processing step, a classifier that would learn roughly how many of the users that we sampled from the public database were also present in the protected one. We then used this classifier’s output to form the queries for the individual users that we would attack next. To model the second type of noise we had to query the linkability database through Aircloak’s Diffix, i.e., we trained each user’s classifier on answers that already contained the noise that Aircloak’s system adds.

The challenge required that attacks be executed over at least 100 users. To evaluate the maximum effectiveness of our linkability attack, we executed it targeting the top 100 users (taxi driver ids) in terms of taxi trips in the public database (note that users with fewer trips would be harder to attack). For each user, we issued approximately 21,600 queries to Aircloak’s system to train the model and 10,800 to test it. Out of the 100 users that we attacked, 62 were present in the protected database, and our classifier successfully linked 50 of them while making a total of 51 claims. This gave us a confidence score of 0.98 and a 95% confidence improvement over a statistical baseline. Aircloak performed further evaluations to verify our attack, and found that on 100 users chosen uniformly at random the confidence improvement was roughly 50%. The attack’s effectiveness score was not clearly defined in the case of linkability attacks; nonetheless, Aircloak decided to award us the full bounty of $5,000.
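The arithmetic behind these scores can be reproduced with a short calculation. The claim counts come from the paragraph above; the improvement formula and the choice of baseline (the 62% of attacked users who were actually in the protected database) are assumptions on our part, chosen because they match the reported figures, and may differ from the exact definitions in the challenge rules.

```python
def confidence(correct_claims, total_claims):
    """Fraction of the attacker's claims that turned out to be correct."""
    return correct_claims / total_claims


def confidence_improvement(conf, baseline):
    """Assumed formula: share of the gap between the baseline guess and
    certainty that the attack closes."""
    return (conf - baseline) / (1 - baseline)


conf = confidence(correct_claims=50, total_claims=51)
baseline = 62 / 100  # assumed baseline: always claiming "present" is right 62% of the time
improvement = confidence_improvement(conf, baseline)

print(f"confidence = {conf:.2f}, improvement = {improvement:.0%}")
# prints "confidence = 0.98, improvement = 95%"
```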

Aircloak thanked us for participating in their challenge and let us know that our attack was useful and insightful for their quest towards improving their anonymization system. In particular, Felix Bauer, Aircloak’s co-founder, said: “Many thanks also from our side for your contribution! We feel very lucky to have you take a crack at our system!” Furthermore, their recent statement indicates that fixes for our linkability attack are in place, and we are excited and eager to know how they defended against it. Finally, we are confident that open and transparent initiatives for anonymization, similar to that by Aircloak, are important for the improvement of privacy-enhancing technologies as well as their wider applicability in industrial settings.

Looking Ahead

As part of future work, there are a few interesting research directions to explore. One of them is obtaining a deeper understanding of membership inference attacks in the setting of aggregate location time-series. To this end, we believe that evaluating the attack’s performance on datasets with diverse mobility patterns, combined with extensive feature analysis, can provide novel insights into the core reasons behind its success. Furthermore, the insights we obtained can pave the way to the design of defenses that limit the power of membership inference without completely sacrificing utility. In fact, we need measurements that study the privacy/utility trade-off between membership inference and specific analytics tasks on aggregate location data. Such studies can shed light on which types of defenses are useful for which kinds of applications. While there might not be a single defense suitable for every type of application, we are hopeful that the research community can contribute defenses that thwart membership inference while optimizing utility for specific analytics tasks.

Thanks to Paul Francis, Carmela Troncoso, and Emiliano De Cristofaro for providing useful feedback and comments on this blog post.